Implementing a Complete CNN in C++ with OpenCV

Header file

Apart from NeuralNetwork, the class that manages the layers, everything here should be self-explanatory.

#pragma once

#include <iostream>

#include <opencv2/opencv.hpp>

#include <vector>

#include <memory>

#include <algorithm>

#include <random>

using namespace cv;

using namespace std;

// Print a matrix's size

void printMat(const string& name, const cv::Size& size);

// Base layer class

class Layer {

public:

virtual ~Layer() = default; // layers are owned through shared_ptr<Layer>

virtual void forward(const Mat& input, Mat& output) = 0;

virtual void backward(const Mat& grad_output, Mat& grad_input) = 0;

virtual void updateWeights(float learning_rate) = 0;

virtual void updateWeightsAdam(float learning_rate, int t, float beta1 = 0.9, float beta2 = 0.999, float eps = 1e-8) = 0;

private:

// Adam parameters

Mat m_weights, v_weights; // first- and second-moment estimates for the weights

Mat m_biases, v_biases; // first- and second-moment estimates for the biases

};

// Convolution layer

class ConvLayer : public Layer {

public:

ConvLayer(int filters, int kernel_size, int stride, int padding, int type);

void forward(const Mat& input, Mat& output) override;

void backward(const Mat& grad_output, Mat& grad_input) override;

void updateWeights(float learning_rate) override;

void updateWeightsAdam(float learning_rate, int t, float beta1 = 0.9, float beta2 = 0.999, float eps = 1e-8) override;

private:

vector<Mat> kernels;

Mat grad_kernel;

Mat input_cache;

int kernel_size;

int filters; // number of kernels

int stride;

int padding;

int type;

Mat biases, grad_biases;

// Adam parameters

Mat m_weights, v_weights; // first- and second-moment estimates for the weights

Mat m_biases, v_biases; // first- and second-moment estimates for the biases

};

// ReLU activation layer

class ReLULayer : public Layer {

public:

void forward(const Mat& input, Mat& output) override;

void backward(const Mat& grad_output, Mat& grad_input) override;

void updateWeights(float learning_rate) override;

void updateWeightsAdam(float learning_rate, int t, float beta1 = 0.9, float beta2 = 0.999, float eps = 1e-8) override;

private:

Mat input_cache;

// Adam parameters

Mat m_weights, v_weights; // first- and second-moment estimates for the weights

Mat m_biases, v_biases; // first- and second-moment estimates for the biases

};

// Pooling layer

class PoolingLayer : public Layer {

public:

PoolingLayer(int pool_size, int stride, int padding);

void forward(const Mat& input, Mat& output) override;

void backward(const Mat& grad_output, Mat& grad_input) override;

void updateWeights(float learning_rate) override;

void updateWeightsAdam(float learning_rate, int t, float beta1 = 0.9, float beta2 = 0.999, float eps = 1e-8) override;

private:

Mat input_cache;

int pool_size;

int stride;

int padding = 0;

// Adam parameters

Mat m_weights, v_weights; // first- and second-moment estimates for the weights

Mat m_biases, v_biases; // first- and second-moment estimates for the biases

};

// Fully connected layer

class FullyConnectedLayer : public Layer {

public:

FullyConnectedLayer(int input_size, int output_size);

void forward(const Mat& input, Mat& output) override;

void backward(const Mat& grad_output, Mat& grad_input) override;

void updateWeights(float learning_rate) override;

void updateWeightsAdam(float learning_rate, int t, float beta1 = 0.9, float beta2 = 0.999, float eps = 1e-8) override;

private:

Mat weights;

Mat biases;

Mat grad_weights;

Mat grad_biases;

Mat input_cache;

// Adam parameters

Mat m_weights, v_weights; // first- and second-moment estimates for the weights

Mat m_biases, v_biases; // first- and second-moment estimates for the biases

};

class SoftmaxLayer : public Layer {

public:

void forward(const Mat& input, Mat& output) override;

void backward(const Mat& grad_output, Mat& grad_input) override;

void updateWeights(float learning_rate) override;

void updateWeightsAdam(float learning_rate, int t, float beta1 = 0.9, float beta2 = 0.999, float eps = 1e-8) override;

private:

Mat input_cache;

};

// Neural network class

class NeuralNetwork {

public:

NeuralNetwork();

void addLayer(shared_ptr<Layer> layer);

void forward(const Mat& input, Mat& output);

void backward(const Mat& loss_grad);

void updateWeights(float learning_rate);

void train(const Mat& inputs, const Mat& labels, int epochs, float learning_rate);

void train(const std::vector<cv::Mat>& images, const cv::Mat& labels, int epochs, float learning_rate);

private:

vector<shared_ptr<Layer>> layers;

vector<Mat> layerOutputs;

};

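Implementation file and detailed walkthrough

Convolution layer

ConvLayer::ConvLayer(int filters, int kernel_size, int stride, int padding, int type)

: filters(filters), kernel_size(kernel_size), stride(stride), padding(padding), type(type) {

// Initialize the kernels. Each kernel has as many channels as the input, and each kernel produces one feature map, so the output has as many channels as there are kernels.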

for (int f = 0; f < filters; ++f) {

Mat one_kernel = Mat::zeros(kernel_size, kernel_size, type);

initializeWeightsXavier(one_kernel);

kernels.push_back(one_kernel);

}

// Initialize the biases

biases = Mat::zeros(1, filters, type);

initializeWeights(biases);

// Initialize the gradient matrices

grad_kernel = Mat::zeros(kernel_size, kernel_size, type);

grad_biases = Mat::zeros(1, filters, type);

// Initialize the matrices used by the Adam optimizer

m_weights = Mat::zeros(kernel_size, kernel_size, type);

v_weights = Mat::zeros(kernel_size, kernel_size, type);

m_biases = Mat::zeros(1, filters, type);

v_biases = Mat::zeros(1, filters, type);

}
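The constructor relies on initializeWeightsXavier, which the post does not list. A minimal sketch of what such a helper might do is given here, assuming Xavier/Glorot uniform initialization. The name xavierUniform, its parameters, and the fixed seed are hypothetical, and it fills a std::vector<float> rather than a cv::Mat so that it compiles without OpenCV.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper: returns `count` weights drawn uniformly from
// [-limit, limit), where limit = sqrt(6 / (fan_in + fan_out)).
std::vector<float> xavierUniform(std::size_t count, int fan_in, int fan_out,
                                 unsigned seed = 42) {
    assert(fan_in > 0 && fan_out > 0);
    const float limit = std::sqrt(6.0f / static_cast<float>(fan_in + fan_out));
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> dist(-limit, limit);
    std::vector<float> weights(count);
    for (float& w : weights) w = dist(rng);
    return weights;
}
```

For a 3x3 single-channel kernel one plausible choice is fan_in = fan_out = 9, which gives a limit of about 0.58.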

void ConvLayer::forward(const Mat& input, Mat& output) {

input.copyTo(input_cache); // cached for backpropagation

// Zero-pad every channel

Mat input_padded;

copyMakeBorder(input, input_padded, padding, padding, padding, padding, BORDER_CONSTANT, 0);

// Convolve channel by channel

std::vector<Mat> input_channels; // single-channel inputs; split() allocates one Mat per channel (a fill-constructed vector<Mat> would make every element share one buffer)

std::vector<Mat> output_channels(filters); // one feature map per kernel

split(input_padded, input_channels);

// Compute the final output size up front

int output_rows = (input.rows - kernel_size + 2 * padding) / stride + 1;

int output_cols = (input.cols - kernel_size + 2 * padding) / stride + 1;

for (int f = 0; f < filters; ++f) {

Mat temp_map = Mat::zeros(output_rows, output_cols, CV_32F); // accumulates the feature map produced by this kernel

Mat temp = Mat::zeros(input_padded.rows, input_padded.cols, CV_32F); // receives each filter2D result

std::vector<Mat> one_kernel_channels; // split() allocates one Mat per kernel channel

split(kernels[f], one_kernel_channels);

for (int c = 0; c < one_kernel_channels.size(); ++c) {

filter2D(input_channels[c], temp, -1, one_kernel_channels[c]); // filter2D computes a correlation; it differs from a true convolution only by a 180-degree flip of the kernel, which does not matter for learned kernels. TensorFlow does the same.

temp = temp(Rect(padding, padding, output_cols, output_rows)); // crop to the output size

temp_map += temp;

}

output_channels[f] = temp_map;

}

cv::merge(output_channels, output);

input_padded.release();

input_channels.shrink_to_fit();

output_channels.shrink_to_fit();

// Many of the temporaries here could actually be references.

}
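The output-size formula and the sliding-window sum used in forward can be written out without OpenCV. The following is a minimal single-channel sketch, not the post's implementation: it assumes a square kernel and zero padding, and the names convOutputSize, correlate2D, and Grid are introduced here for illustration only.

```cpp
#include <cassert>
#include <vector>

using Grid = std::vector<std::vector<float>>;

// Output length along one axis: (in - kernel + 2 * padding) / stride + 1.
int convOutputSize(int in, int kernel, int stride, int padding) {
    assert(stride > 0 && in + 2 * padding >= kernel);
    return (in - kernel + 2 * padding) / stride + 1;
}

// Single-channel cross-correlation with zero padding and stride.
// Assumes a square kernel and a non-empty rectangular input.
Grid correlate2D(const Grid& x, const Grid& k, int stride, int padding) {
    const int H = static_cast<int>(x.size());
    const int W = static_cast<int>(x[0].size());
    const int K = static_cast<int>(k.size());
    const int outH = convOutputSize(H, K, stride, padding);
    const int outW = convOutputSize(W, K, stride, padding);
    Grid y(outH, std::vector<float>(outW, 0.0f));
    for (int i = 0; i < outH; ++i)
        for (int j = 0; j < outW; ++j)
            for (int a = 0; a < K; ++a)
                for (int b = 0; b < K; ++b) {
                    const int r = i * stride + a - padding;
                    const int c = j * stride + b - padding;
                    // Positions outside the input count as zero padding.
                    if (r >= 0 && r < H && c >= 0 && c < W)
                        y[i][j] += k[a][b] * x[r][c];
                }
    return y;
}
```

Unlike the filter2D-and-crop approach, this loop applies the stride directly, so it also covers stride values other than 1.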

void ConvLayer::backward(const Mat& grad_output, Mat& grad_input) {

// Pad the cached input the same way forward() does

Mat input_padded;

copyMakeBorder(input_cache, input_padded, padding, padding, padding, padding, BORDER_CONSTANT, 0);

// Split grad_output and input_padded into their channels

std::vector<Mat> grad_output_channels;

split(grad_output, grad_output_channels);

std::vector<Mat> input_padded_channels;

split(input_padded, input_padded_channels);

// Initialize grad_input and grad_kernel

grad_input = Mat::zeros(input_cache.size(), input_cache.type());

std::vector<Mat> grad_input_channels;

split(grad_input, grad_input_channels);

grad_kernel = Mat::zeros(kernels[0].size(), kernels[0].type());

std::vector<Mat> grad_kernel_channels;

split(grad_kernel, grad_kernel_channels);

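// Compute the gradients one filter (kernel) at a time

for (int f = 0; f < filters; ++f) {

// Split the current filter into its channels

std::vector<Mat> kernel_channels;

split(kernels[f], kernel_channels);

// Temporary matrices for the kernel and input gradients. Each element gets its own buffer, because std::vector<Mat>(n, Mat::zeros(...)) would make all n headers share one buffer.

std::vector<Mat> temp_grad_kernel_channels(kernel_channels.size());

for (auto& m : temp_grad_kernel_channels) m = Mat::zeros(kernel_channels[0].size(), kernel_channels[0].type());

std::vector<Mat> temp_grad_input_channels(input_padded_channels.size()); // filled by filter2D below

for (int c = 0; c < input_padded_channels.size(); ++c) {

// Gradient with respect to the kernel: slide grad_output over the padded input by hand. forward() computes a correlation, so grad_output is used as-is, without a 180-degree rotation.

Mat rotated_kernel = grad_output_channels[f]; // shallow copy; the name is kept for the loop below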

for (int i = 0; i <= input_padded_channels[c].rows - rotated_kernel.rows; ++i) {

for (int j = 0; j <= input_padded_channels[c].cols - rotated_kernel.cols; ++j) {

Rect roi(j, i, rotated_kernel.cols, rotated_kernel.rows);

temp_grad_kernel_channels[c].at<float>(i, j) = sum(input_padded_channels[c](roi).mul(rotated_kernel))[0];

}

}

// Gradient with respect to the input

Mat flipped_kernel;

flip(kernel_channels[c], flipped_kernel, -1); // rotate 180 degrees

filter2D(grad_output_channels[f], temp_grad_input_channels[c], -1, flipped_kernel);

}

// Accumulate the per-channel gradients

for (int c = 0; c < input_padded_channels.size(); ++c) {

grad_input_channels[c] += temp_grad_input_channels[c];

grad_kernel_channels[c] += temp_grad_kernel_channels[c];

}

}

for (int f = 0; f < filters; ++f) {

// Bias gradient

grad_biases.at<float>(0, f) = cv::sum(grad_output_channels[f])[0];

}

// Merge the channels back into grad_kernel and grad_input

cv::merge(grad_kernel_channels, grad_kernel);

cv::merge(grad_input_channels, grad_input);

// Release memory that is no longer needed

input_padded.release();

grad_output_channels.shrink_to_fit();

grad_kernel_channels.shrink_to_fit();

grad_input_channels.shrink_to_fit();

input_padded_channels.shrink_to_fit();

input_cache.release();

// As in forward, many of these could be replaced with references.

}
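The two gradients computed in backward can be checked against a small hand-written version. This is a sketch under simplifying assumptions (single channel, stride 1, no padding, forward pass defined as a correlation); kernelGradient, inputGradient, and Grid are illustrative names, not functions from the post.

```cpp
#include <cassert>
#include <vector>

using Grid = std::vector<std::vector<float>>;

// Kernel gradient: gradK[a][b] = sum over (i, j) of gradY[i][j] * x[i + a][j + b].
// gradY is used without rotation because the forward pass is a correlation.
Grid kernelGradient(const Grid& x, const Grid& gradY) {
    const int H = static_cast<int>(x.size());
    const int W = static_cast<int>(x[0].size());
    const int oH = static_cast<int>(gradY.size());
    const int oW = static_cast<int>(gradY[0].size());
    assert(H >= oH && W >= oW);
    Grid gradK(H - oH + 1, std::vector<float>(W - oW + 1, 0.0f));
    for (int a = 0; a + oH <= H; ++a)
        for (int b = 0; b + oW <= W; ++b)
            for (int i = 0; i < oH; ++i)
                for (int j = 0; j < oW; ++j)
                    gradK[a][b] += gradY[i][j] * x[i + a][j + b];
    return gradK;
}

// Input gradient: each output cell scatters gradY[i][j] * k[a][b] back onto
// x[i + a][j + b]. This equals a full convolution of gradY with the kernel,
// i.e. a correlation with the kernel rotated by 180 degrees.
Grid inputGradient(const Grid& gradY, const Grid& k) {
    const int oH = static_cast<int>(gradY.size());
    const int oW = static_cast<int>(gradY[0].size());
    const int kH = static_cast<int>(k.size());
    const int kW = static_cast<int>(k[0].size());
    Grid gradX(oH + kH - 1, std::vector<float>(oW + kW - 1, 0.0f));
    for (int i = 0; i < oH; ++i)
        for (int j = 0; j < oW; ++j)
            for (int a = 0; a < kH; ++a)
                for (int b = 0; b < kW; ++b)
                    gradX[i + a][j + b] += gradY[i][j] * k[a][b];
    return gradX;
}
```

The asymmetry is the point of the sketch: the rotation appears in the input gradient, while the kernel gradient correlates the input with grad_output directly.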

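// Weight update with plain gradient descent. Note that grad_kernel is a single matrix accumulated over all filters, so every kernel receives the same step, and the biases are not updated here.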

void ConvLayer::updateWeights(float learning_rate) {

for (int i = 0; i < filters; ++i) {

kernels[i] -= learning_rate * grad_kernel;

}

}

// Adam weight update

void ConvLayer::updateWeightsAdam(float learning_rate, int t, float beta1, float beta2, float eps) {

// Update the first- and second-moment estimates

for (int i = 0; i < filters; ++i) {

m_weights = beta1 * m_weights + (1 - beta1) * grad_kernel;

v_weights = beta2 * v_weights + (1 - beta2) * grad_kernel.mul(grad_kernel);

// Bias correction

Mat mhat_w, vhat_w;

mhat_w = m_weights / (1 - pow(beta1, t));

vhat_w = v_weights / (1 - pow(beta2, t));

// Update the weights

Mat sqrt_vhat_w;

cv::sqrt(vhat_w, sqrt_vhat_w);

kernels[i] -= learning_rate * mhat_w / (sqrt_vhat_w + eps);

}

m_biases = beta1 * m_biases + (1 - beta1) * grad_biases;

v_biases = beta2 * v_biases + (1 - beta2) * grad_biases.mul(grad_biases);

// Bias correction

Mat mhat_b, vhat_b;

mhat_b = m_biases / (1 - pow(beta1, t));

vhat_b = v_biases / (1 - pow(beta2, t));

// Update the biases

Mat sqrt_vhat_b;

cv::sqrt(vhat_b, sqrt_vhat_b);

biases -= learning_rate * mhat_b / (sqrt_vhat_b + eps);

}
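The Adam update above is easier to follow on a flat parameter vector. The sketch below is an illustration rather than the post's code: AdamState and adamStep are hypothetical names, and the step counter t is kept inside the state so that the bias-correction terms 1 - beta1^t and 1 - beta2^t change from one update to the next.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct AdamState {
    std::vector<float> m, v;  // first- and second-moment estimates
    int t = 0;                // number of updates applied so far
};

// One Adam update of the parameters w given their gradient g.
void adamStep(std::vector<float>& w, const std::vector<float>& g, AdamState& s,
              float lr, float beta1 = 0.9f, float beta2 = 0.999f,
              float eps = 1e-8f) {
    assert(w.size() == g.size());
    if (s.m.empty()) {  // lazy initialization on the first call
        s.m.assign(w.size(), 0.0f);
        s.v.assign(w.size(), 0.0f);
    }
    ++s.t;
    const float c1 = 1.0f - static_cast<float>(std::pow(beta1, s.t));
    const float c2 = 1.0f - static_cast<float>(std::pow(beta2, s.t));
    for (std::size_t i = 0; i < w.size(); ++i) {
        s.m[i] = beta1 * s.m[i] + (1.0f - beta1) * g[i];
        s.v[i] = beta2 * s.v[i] + (1.0f - beta2) * g[i] * g[i];
        const float mhat = s.m[i] / c1;  // bias-corrected first moment
        const float vhat = s.v[i] / c2;  // bias-corrected second moment
        w[i] -= lr * mhat / (std::sqrt(vhat) + eps);
    }
}
```

With a constant gradient the bias-corrected ratio stays close to 1, so each call moves the weight by roughly lr.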

ReLU activation layer

// ReLULayer implementation

void ReLULayer::forward(const Mat& input, Mat& output) {

input.copyTo(input_cache);

max(input, 0, output);

}

void ReLULayer::backward(const Mat& grad_output, Mat& grad_input) {

grad_output.copyTo(grad_input);

grad_input.setTo(0, input_cache <= 0);

input_cache.release();

}

// The ReLU layer has no weights, so there is nothing to update

void ReLULayer::updateWeights(float learning_rate) { }

void ReLULayer::updateWeightsAdam(float learning_rate, int t, float beta1, float beta2, float eps) {

// The ReLU layer has no weights or biases, so there is nothing to update

}
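The ReLU forward and backward passes reduce to two element-wise rules, shown here on plain vectors. This is a sketch for illustration only; reluForward and reluBackward are names introduced here, and the input is passed in explicitly instead of being cached in the layer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Forward: y = max(x, 0), element by element.
std::vector<float> reluForward(const std::vector<float>& x) {
    std::vector<float> y(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) y[i] = x[i] > 0.0f ? x[i] : 0.0f;
    return y;
}

// Backward: the gradient passes through where the input was positive
// and is zeroed where the input was <= 0.
std::vector<float> reluBackward(const std::vector<float>& x,
                                const std::vector<float>& gradY) {
    assert(x.size() == gradY.size());
    std::vector<float> gradX(x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        gradX[i] = x[i] > 0.0f ? gradY[i] : 0.0f;
    return gradX;
}
```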

Pooling layer

// Max-pooling layer

PoolingLayer::PoolingLayer(int pool_size, int stride, int padding)

: pool_size(pool_size), stride(stride), padding(padding) {}

void PoolingLayer::forward(const Mat& input, Mat& output) {

input.copyTo(input_cache); // cache the input; without it, backward() cannot locate the maxima

int out_rows = (input.rows - pool_size + 2 * padding) / stride + 1;

int out_cols = (input.cols - pool_size + 2 * padding) / stride + 1;

std::vector<cv::Mat> input_channels(input.channels());

std::vector<cv::Mat> output_channels(input.channels());

cv::split(input, input_channels);

for (int c = 0; c < input.channels(); ++c) {

output_channels[c] = Mat(out_rows, out_cols, input_channels[c].type());

for (int i = 0; i < out_rows; ++i) {

for (int j = 0; j < out_cols; ++j) {

int start_i = i * stride - padding;

int start_j = j * stride - padding;

int end_i = std::min(start_i + pool_size, input.rows);

int end_j = std::min(start_j + pool_size, input.cols);

start_i = std::max(start_i, 0); // clamp so the window never starts at a negative index

start_j = std::max(start_j, 0);

Rect roi(start_j, start_i, end_j - start_j, end_i - start_i);

Mat subMat = input_channels[c](roi);

double minVal, maxVal;

cv::minMaxLoc(subMat, &minVal, &maxVal);

output_channels[c].at<float>(i, j) = static_cast<float>(maxVal);

}

}

}

cv::merge(output_channels, output);

input_channels.shrink_to_fit();

output_channels.shrink_to_fit();

}

void PoolingLayer::backward(const Mat& grad_output, Mat& grad_input) {

grad_input = Mat::zeros(input_cache.size(), input_cache.type());

std::vector<cv::Mat> input_channels;

std::vector<cv::Mat> grad_input_channels(input_cache.channels());

std::vector<cv::Mat> grad_output_channels;

cv::split(input_cache, input_channels);

cv::split(grad_output, grad_output_channels);

for (int c = 0; c < input_cache.channels(); ++c) {

Mat& grad_input_channel = grad_input_channels[c];

grad_input_channel = Mat::zeros(input_channels[c].size(), input_channels[c].type());

for (int i = 0; i < grad_output.rows; ++i) {

for (int j = 0; j < grad_output.cols; ++j) {

int start_i = i * stride - padding;

int start_j = j * stride - padding;

int end_i = std::min(start_i + pool_size, input_channels[c].rows);

int end_j = std::min(start_j + pool_size, input_channels[c].cols);

start_i = std::max(start_i, 0);

start_j = std::max(start_j, 0);

Rect roi(start_j, start_i, end_j - start_j, end_i - start_i);

Mat subMat = input_channels[c](roi);

double maxVal;

Point maxLoc;

cv::minMaxLoc(subMat, nullptr, &maxVal, nullptr, &maxLoc);

int grad_input_row = maxLoc.y + start_i;

int grad_input_col = maxLoc.x + start_j;

grad_input_channel.at<float>(grad_input_row, grad_input_col) += grad_output_channels[c].at<float>(i, j);

}

}

}

cv::merge(grad_input_channels, grad_input);

input_cache.release();

input_channels.shrink_to_fit();

grad_input_channels.shrink_to_fit();

grad_output_channels.shrink_to_fit();

}

void PoolingLayer::updateWeights(float learning_rate) {

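// The pooling layer has no weights, so there is nothing to update

}

void PoolingLayer::updateWeightsAdam(float learning_rate, int t, float beta1, float beta2, float eps) {

// The pooling layer has no weights or biases, so there is nothing to update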

}
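Max pooling routes each output gradient back to the single input position that produced the maximum. The sketch below shows this for one channel without padding. It differs from the code above in one design choice: it stores the argmax positions during the forward pass instead of recomputing them from a cached input. PoolResult, maxPoolForward, and maxPoolBackward are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using Grid = std::vector<std::vector<float>>;

struct PoolResult {
    Grid out;                                 // pooled values
    std::vector<std::pair<int, int>> argmax;  // (row, col) of each maximum, row-major
};

PoolResult maxPoolForward(const Grid& x, int pool, int stride) {
    const int H = static_cast<int>(x.size());
    const int W = static_cast<int>(x[0].size());
    assert(pool > 0 && stride > 0 && H >= pool && W >= pool);
    const int outH = (H - pool) / stride + 1;
    const int outW = (W - pool) / stride + 1;
    PoolResult r;
    r.out.assign(outH, std::vector<float>(outW, 0.0f));
    for (int i = 0; i < outH; ++i)
        for (int j = 0; j < outW; ++j) {
            int bestR = i * stride, bestC = j * stride;
            for (int a = 0; a < pool; ++a)
                for (int b = 0; b < pool; ++b) {
                    const int rr = i * stride + a, cc = j * stride + b;
                    if (x[rr][cc] > x[bestR][bestC]) { bestR = rr; bestC = cc; }
                }
            r.out[i][j] = x[bestR][bestC];
            r.argmax.emplace_back(bestR, bestC);
        }
    return r;
}

// Each output gradient is added to the input cell that held the maximum.
Grid maxPoolBackward(const PoolResult& fwd, const Grid& gradY, int H, int W) {
    Grid gradX(H, std::vector<float>(W, 0.0f));
    const std::size_t outW = gradY[0].size();
    for (std::size_t i = 0; i < gradY.size(); ++i)
        for (std::size_t j = 0; j < outW; ++j) {
            const std::pair<int, int>& p = fwd.argmax[i * outW + j];
            gradX[p.first][p.second] += gradY[i][j];
        }
    return gradX;
}
```

Storing the positions trades a little memory for not having to keep the whole input around until the backward pass.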

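Fully connected layer

// FullyConnectedLayer implementation

// Batching is not implemented, so the fully connected layer always flattens its input into a single row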

FullyConnectedLayer::FullyConnectedLayer(int input_size, int output_size) {

weights = Mat::zeros(output_size, input_size, CV_32F);

biases = Mat::zeros(1, output_size, CV_32F);

initializeWeightsXavier(weights);

initializeWeightsXavier(biases);

//initializeWeightsXavier(weights);

//initializeWeightsXavier(biases);

}

void FullyConnectedLayer::forward(const Mat& input, Mat& output) {

input.copyTo(input_cache);

// Flatten the input and make sure its type is CV_32F

Mat flat_input = input_cache.reshape(1, 1); // flatten into a single row

flat_input.convertTo(flat_input, CV_32F);

try {

output = flat_input * weights.t(); // matrix multiplication

output = output + biases;

DebugPrint("->FullyConnectedLayer forward output size", output.size());

}

catch (const cv::Exception& e) {

cout << "OpenCV error: " << e.what() << endl;

}

catch (const std::exception& e) {

cout << "Standard error: " << e.what() << endl;

}

catch (...) {

cout << "Unknown error occurred during matrix multiplication." << endl;

}

flat_input.release();

}

void FullyConnectedLayer::backward(const Mat& grad_output, Mat& grad_input) {

int input_cache_channels = input_cache.channels();

int input_cache_rows = input_cache.rows;

input_cache.convertTo(input_cache, CV_32F);

// Compute grad_weights

grad_weights = grad_output.t() * input_cache.reshape(1, 1); // matrix multiplication

// Compute grad_biases

grad_output.copyTo(grad_biases);

grad_input = grad_output * weights; // matrix multiplication

grad_input = grad_input.reshape(input_cache_channels, input_cache_rows);

input_cache.release();

}

void FullyConnectedLayer::updateWeights(float learning_rate) {

Mat lr_times_grad_weights = learning_rate * grad_weights;

weights -= lr_times_grad_weights;

biases -= learning_rate * grad_biases;

}

void FullyConnectedLayer::updateWeightsAdam(float learning_rate, int t, float beta1, float beta2, float eps) {

// Initialize m and v on the first call

if (m_weights.empty()) {

m_weights = Mat::zeros(grad_weights.size(), grad_weights.type());

v_weights = Mat::zeros(grad_weights.size(), grad_weights.type());

m_biases = Mat::zeros(grad_biases.size(), grad_biases.type());

v_biases = Mat::zeros(grad_biases.size(), grad_biases.type());

}

// Update the first- and second-moment estimates

m_weights = beta1 * m_weights + (1 - beta1) * grad_weights;

v_weights = beta2 * v_weights + (1 - beta2) * grad_weights.mul(grad_weights);

m_biases = beta1 * m_biases + (1 - beta1) * grad_biases;

v_biases = beta2 * v_biases + (1 - beta2) * grad_biases.mul(grad_biases);

// Bias correction

Mat mhat_w, vhat_w, mhat_b, vhat_b;

cv::divide(m_weights, (1 - pow(beta1, t)), mhat_w);

cv::divide(v_weights, (1 - pow(beta2, t)), vhat_w);

cv::divide(m_biases, (1 - pow(beta1, t)), mhat_b);

cv::divide(v_biases, (1 - pow(beta2, t)), vhat_b);

// Update the weights and biases

Mat sqrt_vhat_w, sqrt_vhat_b;

cv::sqrt(vhat_w, sqrt_vhat_w);

cv::sqrt(vhat_b, sqrt_vhat_b);

//cout << weights.rows << " " << weights.cols << endl;

// Update weights row by row (each row of weights holds the weights that feed a single output neuron)

for (int i = 0; i < weights.rows; ++i) {

weights.row(i) -= learning_rate * mhat_w.row(i) / (sqrt_vhat_w.row(i) + eps);

}

biases -= learning_rate * mhat_b / (sqrt_vhat_b + eps);

}
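The three matrix products in the fully connected layer correspond to y = W x + b in the forward pass, and gradW = gradY xᵀ, gradB = gradY, gradX = Wᵀ gradY in the backward pass. A small std-only sketch makes the shapes explicit. The names fcForward, fcBackward, and FcGrads are hypothetical, and the weights use the same output_size x input_size layout as above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<float>;
using Matrix = std::vector<Vec>;  // w[o][i]: output_size rows, input_size columns

// Forward: y[o] = b[o] + sum over i of w[o][i] * x[i].
Vec fcForward(const Matrix& w, const Vec& b, const Vec& x) {
    assert(w.size() == b.size());
    Vec y(b);
    for (std::size_t o = 0; o < w.size(); ++o) {
        assert(w[o].size() == x.size());
        for (std::size_t i = 0; i < x.size(); ++i) y[o] += w[o][i] * x[i];
    }
    return y;
}

struct FcGrads {
    Matrix gradW;  // same shape as w
    Vec gradB;     // same shape as b
    Vec gradX;     // same shape as x
};

// Backward: gradW = outer(gradY, x), gradB = gradY, gradX = transpose(w) * gradY.
FcGrads fcBackward(const Matrix& w, const Vec& x, const Vec& gradY) {
    assert(w.size() == gradY.size());
    FcGrads g;
    g.gradW.assign(w.size(), Vec(x.size(), 0.0f));
    g.gradB = gradY;
    g.gradX.assign(x.size(), 0.0f);
    for (std::size_t o = 0; o < w.size(); ++o)
        for (std::size_t i = 0; i < x.size(); ++i) {
            g.gradW[o][i] = gradY[o] * x[i];
            g.gradX[i] += w[o][i] * gradY[o];
        }
    return g;
}
```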

Neural network class (layer manager)

// NeuralNetwork implementation

NeuralNetwork::NeuralNetwork() {

// Pre-size layerOutputs. It holds one more entry than layers, because slot 0 stores the network input.

layerOutputs.resize(layers.size() + 1);

}

void NeuralNetwork::addLayer(shared_ptr<Layer> layer) {

layers.push_back(layer);

// Resize layerOutputs after adding the layer

layerOutputs.resize(layers.size() + 1);

}

void NeuralNetwork::forward(const Mat& input, Mat& output) {

// The first layer's input is the network input

input.copyTo(layerOutputs[0]);

// Call forward on every layer

for (size_t i = 0; i < layers.size(); ++i) {

//cout << "NeuralNetwork forward switching!" << endl;

layers[i]->forward(layerOutputs[i], layerOutputs[i + 1]);

//cout << "NeuralNetwork forward switch finished!" << endl;

}

// The last layer's output is the network output

output = layerOutputs.back();

}

void NeuralNetwork::backward(const Mat& loss_grad) {

Mat current_grad = loss_grad;

Mat next_grad;

for (auto it = layers.rbegin(); it != layers.rend(); ++it) {

(*it)->backward(current_grad, next_grad);

current_grad = next_grad.clone(); // deep copy, so the next layer's grad_input never aliases its grad_output

}

}
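The manager's job is small: run the layers in order on the way forward and in reverse order on the way back, with each layer caching whatever it needs for its own gradient. The toy sketch below shows that pattern on scalars. ToyLayer, Scale, Square, and ToyNetwork are made-up names for illustration and are unrelated to the classes above.

```cpp
#include <cassert>
#include <memory>
#include <vector>

struct ToyLayer {
    virtual ~ToyLayer() = default;
    virtual float forward(float x) = 0;
    virtual float backward(float gradOut) = 0;
};

// y = k * x, so dy/dx = k.
struct Scale : ToyLayer {
    explicit Scale(float k) : k(k) {}
    float forward(float x) override { return k * x; }
    float backward(float gradOut) override { return k * gradOut; }
    float k;
};

// y = x * x, so dy/dx = 2 * x; the input is cached for the backward pass.
struct Square : ToyLayer {
    float forward(float x) override { cache = x; return x * x; }
    float backward(float gradOut) override { return 2.0f * cache * gradOut; }
    float cache = 0.0f;
};

struct ToyNetwork {
    std::vector<std::shared_ptr<ToyLayer>> layers;

    float forward(float x) {
        assert(!layers.empty());
        for (auto& layer : layers) x = layer->forward(x);
        return x;
    }

    // Walk the layers in reverse, feeding each gradient into the previous layer.
    float backward(float grad) {
        for (auto it = layers.rbegin(); it != layers.rend(); ++it)
            grad = (*it)->backward(grad);
        return grad;
    }
};
```

For f(x) = (3x)^2 the derivative is 18x, which is what the reverse walk returns when the network is built as Scale(3) followed by Square.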

void NeuralNetwork::updateWeights(float learning_rate) {

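// Call the update function of every layer

for (const auto& layer : layers) {

// layer->updateWeights(learning_rate);

// Note: the Adam step count t is fixed at 1 here, so the bias correction is only exact for the first update; passing the real step number would require a counter.

layer->updateWeightsAdam(learning_rate, 1, 0.9, 0.999, 1e-8);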

}

}

void NeuralNetwork::train(const std::vector<cv::Mat>& images, const cv::Mat& labels, int epochs, float learning_rate) {

// Generate an index vector that will be shuffled for random order in each epoch.

std::vector<int> indices(images.size());

for (int i = 0; i < indices.size(); ++i) {

indices[i] = i; // Initialize with consecutive integers.

}

for (int epoch = 0; epoch < epochs; ++epoch) {

double totalLoss = 0.0;

double frontLoss = 0.0;

// Shuffle the indices at the beginning of each epoch.

cv::randShuffle(indices);

for (size_t i = 0; i < indices.size(); ++i) {

int idx = indices[i]; // Get the next index in the shuffled order.

// Forward pass

cv::Mat output;

forward(images[idx], output);

//cout << output << endl; // print the output for a single sample

// Extract one-hot encoded label

cv::Mat currentLabel = labels.row(idx);

// Compute loss

double loss_value = crossEntropyLossV2(output, currentLabel);

totalLoss += loss_value;

// Backward pass

cv::Mat loss_grad = crossEntropyLossGradV2(output, currentLabel);

//cout << "start backword" << endl;

backward(loss_grad);

// Update weights

updateWeights(learning_rate);

//system("pause");

}

// Print average loss over the epoch

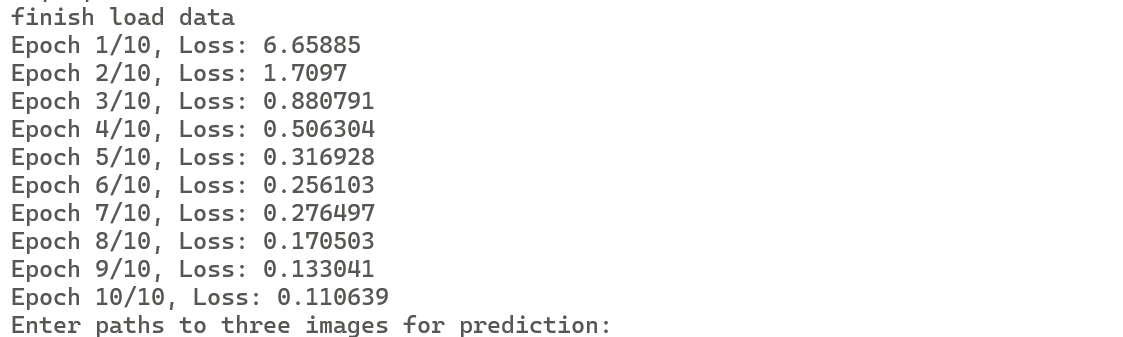

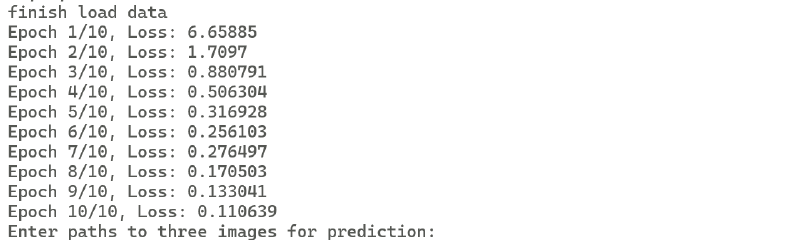

std::cout << "Epoch " << epoch + 1 << "/" << epochs << ", Loss: " << totalLoss / images.size() << std::endl;

//system("pause");

}

}
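The training loop calls crossEntropyLossV2 and crossEntropyLossGradV2, which are not listed in the post. One common way to define such a pair is sketched below. It is an assumption, not the author's code: it treats the loss as softmax followed by cross-entropy against a one-hot label, in which case the gradient with respect to the logits is simply probs - label. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable softmax: subtract the maximum before exponentiating.
std::vector<float> softmax(const std::vector<float>& logits) {
    assert(!logits.empty());
    const float mx = *std::max_element(logits.begin(), logits.end());
    std::vector<float> p(logits.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        p[i] = std::exp(logits[i] - mx);
        sum += p[i];
    }
    for (float& v : p) v /= sum;
    return p;
}

// Cross-entropy: -sum over i of label[i] * log(probs[i]), clamped away from log(0).
float crossEntropy(const std::vector<float>& probs,
                   const std::vector<float>& onehot) {
    assert(probs.size() == onehot.size());
    float loss = 0.0f;
    for (std::size_t i = 0; i < probs.size(); ++i)
        loss -= onehot[i] * std::log(std::max(probs[i], 1e-12f));
    return loss;
}

// Gradient of softmax + cross-entropy with respect to the logits: probs - label.
std::vector<float> softmaxCrossEntropyGrad(const std::vector<float>& probs,
                                           const std::vector<float>& onehot) {
    assert(probs.size() == onehot.size());
    std::vector<float> grad(probs.size());
    for (std::size_t i = 0; i < probs.size(); ++i) grad[i] = probs[i] - onehot[i];
    return grad;
}
```

If the network ends in a separate softmax layer that backpropagates on its own, the loss gradient would instead be taken with respect to the probabilities, so which form applies depends on how the last layer is implemented.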

Unfinished features

- Model saving

- CUDA acceleration

- Batch training
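As a starting point for the batch-training item, the shuffled index vector used in train could be cut into fixed-size groups, with gradients accumulated over each group before a single weight update. The sketch below covers only the grouping step; makeBatches is a hypothetical helper that does not exist in the code above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Split a list of sample indices into consecutive batches of at most batchSize.
// The last batch may be smaller when the sizes do not divide evenly.
std::vector<std::vector<int>> makeBatches(const std::vector<int>& indices,
                                          std::size_t batchSize) {
    assert(batchSize > 0);
    std::vector<std::vector<int>> batches;
    for (std::size_t start = 0; start < indices.size(); start += batchSize) {
        const std::size_t end = std::min(start + batchSize, indices.size());
        batches.emplace_back(indices.begin() + static_cast<std::ptrdiff_t>(start),
                             indices.begin() + static_cast<std::ptrdiff_t>(end));
    }
    return batches;
}
```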

Note

Some functions are deliberately left out. Some things you have to work out yourself, right?

Showcase